Ensuring the quality of automatically generated code has always been a hard problem at APIMatic. Code generation engines generate code dynamically; a section of code that appears in one generated SDK might look different, or not exist at all, in other SDKs. How, then, can the correctness of generated code be ensured when its form is determined by limitless combinations of endpoint configurations, model structures, and code generation settings? In this article, we share our humble beginnings in the world of generated code testing, our current state of affairs, and our future goals and aspirations.

To ensure that a code generation engine is working correctly, it is not enough to test only the code that generates code. Individual components of the engine might generate pieces of code correctly, but these pieces must also fit together and work as expected in the form of an SDK. Independent testing of the generated SDK is therefore also required to guarantee quality. But how does one go about doing that? For compiled languages, you can compile the generated SDK and the compiler will catch syntax errors for you. But what do you do about logic errors? And how do you test SDKs for interpreted programming languages, which typically require running the code to find even simple syntax errors?
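
To make the distinction between syntax errors and logic errors concrete, here is a minimal sketch in Python. It assumes the generated SDK is Python source and uses the standard library's `ast.parse` to check that a file parses without executing it; the function name `syntax_errors` and the sample snippets are hypothetical and not part of our engine. Note that the snippet with the logic error passes the check, which is exactly why a parse or compile step alone is not enough.

```python
import ast


def syntax_errors(source: str, filename: str = "<generated>") -> list:
    """Return a list of syntax error messages; empty if the source parses."""
    try:
        # ast.parse only builds a syntax tree; it does not execute the code.
        ast.parse(source, filename=filename)
    except SyntaxError as exc:
        return [f"{filename}:{exc.lineno}: {exc.msg}"]
    return []


# Hypothetical fragments of generated SDK code.
valid_code = "def add(a, b):\n    return a + b\n"
broken_syntax = "def add(a, b:\n    return a + b\n"   # missing closing parenthesis
broken_logic = "def add(a, b):\n    return a - b\n"   # parses fine, computes the wrong result

for label, code in [("valid", valid_code),
                    ("syntax error", broken_syntax),
                    ("logic error", broken_logic)]:
    print(label, "->", syntax_errors(code) or "parses cleanly")
```

Only a unit test that actually calls `add` and checks its result would catch the third case.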

The Past — Manual Testing

The only way to make sure that the generated code works exactly as expected is to write unit tests for it, and that is how our initial testing efforts began. We created a comprehensive API description covering the most commonly expected endpoint configurations, model structures, and code generation settings.

A test API, aptly named Tester API, was created against this API description. SDKs were generated for it, and developers manually wrote unit tests for these SDKs. Whenever changes were made to the code generation engine to add new features or fix bugs, developers regenerated the SDKs for the Tester API and ran the unit tests they had previously written.

These unit tests had to be updated whenever the Tester API was improved or extended. When additional test APIs were created to test varying code generation settings or features like API authentication, it became increasingly tedious for developers to maintain unit tests for all of them and to run them manually after every small change in the engine. Because of these limitations and scalability issues, the manual approach was quickly discarded in favor of a more automated one.

The Present — Jenkins CI and Automatically Generated Unit Tests

We had been working on adding functionality in our code generation engine to generate unit tests with our SDKs when we realized that it was the perfect opportunity to simultaneously set up a testing server in-house. Given our need to test SDKs of multiple APIs in ten different languages, we required flexibility and power and therefore opted for an on-premise Jenkins installation.

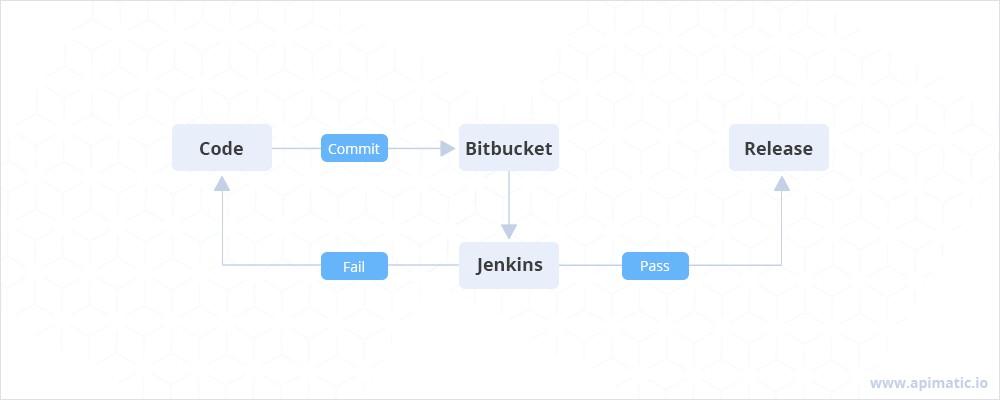

Gone are the days of manually writing and maintaining unit tests for our SDKs; we design unit tests for our APIs once and leave it up to our code generation engine to generate language-specific unit tests. Whenever a developer makes a commit to our code generation engine, our Jenkins server pulls the code from the online repository, compiles the project, runs internal unit tests, generates SDKs for all our supported platforms and all our test APIs, and then runs the unit tests for all these SDKs. The whole automated process, which tests SDKs of about a dozen APIs, takes about thirty minutes. If all tests pass, the developer is allowed to release their changes.

This approach has limitations as well. Even though our code generation engine generates unit tests for us and our Jenkins server runs them, we still have to design these unit tests and add them to the API descriptions ourselves. We also have to manually update the corresponding test APIs when the API descriptions are improved or extended. Furthermore, although we now have very wide test coverage for our generated code, we cannot yet guarantee that all corner cases are covered; ideally, every SDK generated by our code generation engine should be automatically tested before reaching the hands of our clients.

The Future — Mock APIs and Automatically Designed Unit Tests

Planning the future of SDK testing at APIMatic has us all very excited, and we have some ideas that we would like to implement as soon as possible. We are aiming to introduce a testing framework that will enable us to achieve 100% test coverage for each and every SDK generated by our code generation engine. Any SDK delivered into the hands of our customers will be guaranteed to work correctly.

This can be achieved by using our code generation engine to create mock APIs that serve randomly generated static data. To create a mock API, all one needs to know is the structure of the data and the methods of receiving and serving it. This information is completely and very conveniently captured by API descriptions. Once we start creating mock APIs automatically, our code generation engine will be able to not only generate SDK-specific unit tests but also design these unit tests based on the mock API it has created.
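
As a rough sketch of this idea, the snippet below derives mock responses and test designs from a description of the data. Everything in it is an assumption made for illustration: `DESCRIPTION` is a heavily simplified stand-in for a real API description, and `build_mock` and `design_tests` are hypothetical helpers. The data is generated once from a seeded random number generator, so it is random in content but static from the point of view of the tests.

```python
import random
import string

# Hypothetical, heavily simplified API description: endpoint -> response fields.
DESCRIPTION = {
    "get_user": {"id": "integer", "name": "string", "active": "boolean"},
}


def random_value(kind: str, rng: random.Random):
    """Produce a random value for one of the supported primitive types."""
    if kind == "integer":
        return rng.randint(0, 1000)
    if kind == "string":
        return "".join(rng.choice(string.ascii_lowercase) for _ in range(8))
    if kind == "boolean":
        return rng.random() < 0.5
    raise ValueError(f"unsupported type: {kind}")


def build_mock(description: dict, seed: int = 0) -> dict:
    """Generate each endpoint's response once, so the mock always serves the same data."""
    rng = random.Random(seed)
    return {
        endpoint: {field: random_value(kind, rng) for field, kind in fields.items()}
        for endpoint, fields in description.items()
    }


def design_tests(mock: dict) -> list:
    """Design one test per endpoint: calling it should return the mock's data."""
    return [{"endpoint": endpoint, "expected": data} for endpoint, data in mock.items()]


mock = build_mock(DESCRIPTION, seed=42)
tests = design_tests(mock)
print(tests)
```

Because the expected values come from the same source as the mock's responses, no one has to write them by hand; the test designs could then be rendered into language-specific unit tests in the same way as manually designed ones.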

We expect that this framework, once implemented, will allow us to actively monitor our service for faulty SDK generations. We will be able to squash bugs quickly without having to wait for bug reports, and this should eventually enable us to deliver SDKs of unprecedented quality to our customers.

We always welcome feedback, comments, and suggestions from our customers. Please reach out if you have any questions or would like to discuss better ways to ensure the quality of our generated code!